Lukasz Krupski, a former Tesla employee, has raised serious concerns about the safety of the company's Autopilot technology. In a recent interview with the BBC, Krupski stated that he believes the AI-powered system is not ready for public use, potentially putting road users at risk.

- Krupski claims both the hardware and software for Autopilot are not sufficiently developed for safe public road use.

- He argues that Tesla's self-driving technology essentially turns public roads into testing grounds, affecting all road users, including pedestrians.

- The whistleblower leaked internal data to German newspaper Handelsblatt in May, including customer complaints about Tesla's braking and self-driving software.

- Krupski alleges that his attempts to raise concerns internally were ignored by Tesla management.

Tesla's Response:

While Tesla has not directly addressed Krupski's claims, CEO Elon Musk recently stated on social media that "Tesla has by far the best real-world AI."

The company has consistently defended its Autopilot technology as a driver assistance feature that requires active driver supervision.

Broader Context:

Krupski's allegations come amid ongoing scrutiny of Tesla's Autopilot system:

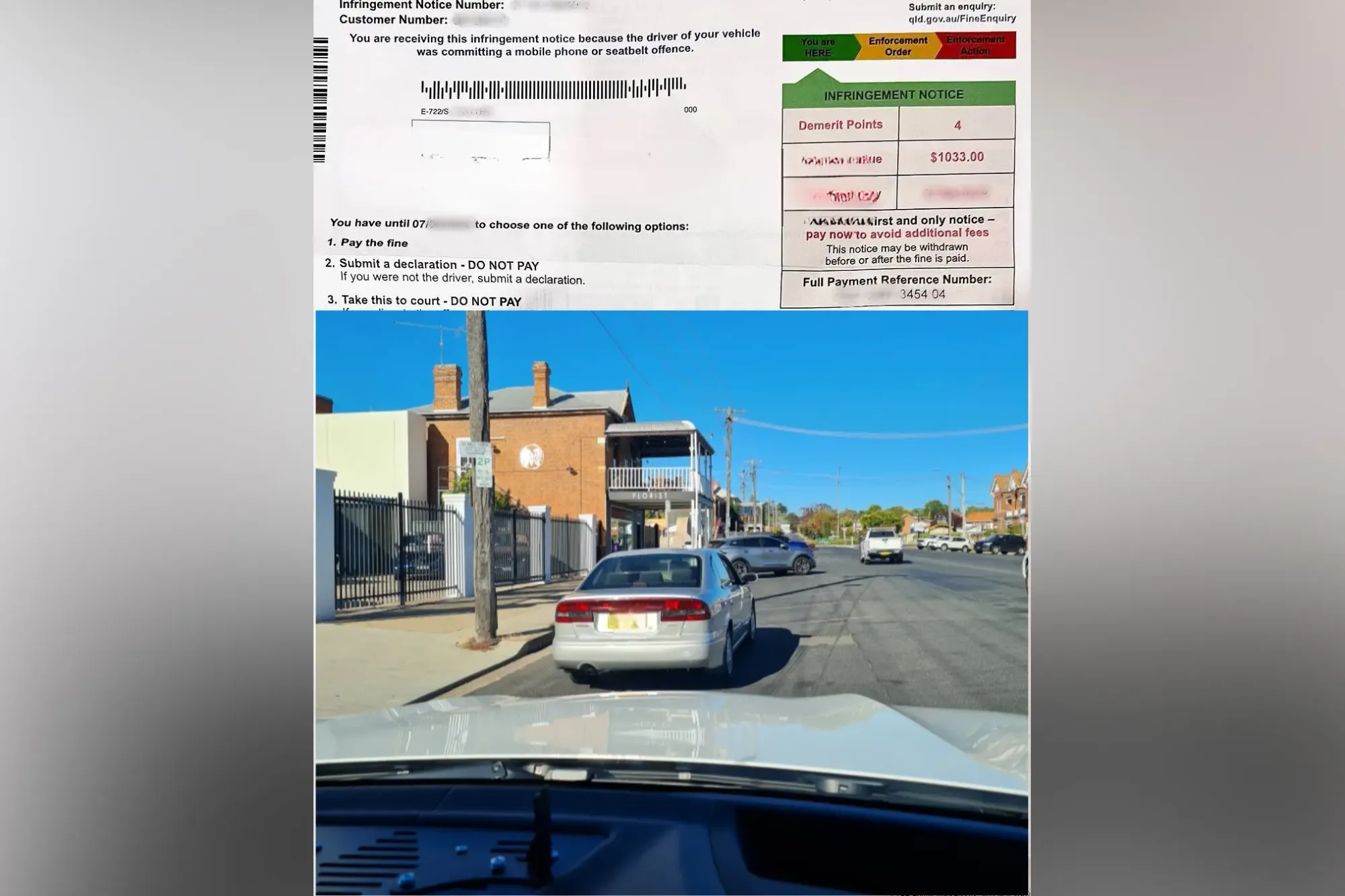

- The US National Highway Traffic Safety Administration (NHTSA) has opened multiple investigations into Tesla crashes involving Autopilot.

- A recent report found that Tesla's Autopilot has been involved in at least 736 crashes since 2019, including 17 fatalities.

- Tesla faces legal challenges, including a Florida lawsuit in which a judge ruled there is "reasonable evidence" that Tesla knew about dangerous Autopilot defects.

As Tesla continues to expand its Full Self-Driving beta program, Krupski's whistleblower account adds to the ongoing debate about the safety and regulation of autonomous driving technologies. His case highlights the need for transparent communication between automakers, regulators, and the public regarding the capabilities and limitations of self-driving systems.